[edk2-devel] [PATCH v9] IntelFsp2Pkg: Add Config Editor tool support

Chiu, Chasel

chasel.chiu at intel.com

Fri Jun 25 02:31:13 UTC 2021

Hi Tung Lun,

One small update is needed: please add the Bugzilla link to the commit message for reference.

Thanks,

Chasel

> -----Original Message-----

> From: devel at edk2.groups.io <devel at edk2.groups.io> On Behalf Of Chiu, Chasel

> Sent: Friday, June 25, 2021 10:28 AM

> To: Loo, Tung Lun <tung.lun.loo at intel.com>; devel at edk2.groups.io

> Cc: Ma, Maurice <maurice.ma at intel.com>; Desimone, Nathaniel L

> <nathaniel.l.desimone at intel.com>; Zeng, Star <star.zeng at intel.com>

> Subject: Re: [edk2-devel] [PATCH v9] IntelFsp2Pkg: Add Config Editor tool support

>

>

> Reviewed-by: Chasel Chiu <chasel.chiu at intel.com>

>

> > -----Original Message-----

> > From: Loo, Tung Lun <tung.lun.loo at intel.com>

> > Sent: Friday, June 25, 2021 9:09 AM

> > To: devel at edk2.groups.io

> > Cc: Loo, Tung Lun <tung.lun.loo at intel.com>; Ma, Maurice

> > <maurice.ma at intel.com>; Desimone, Nathaniel L

> > <nathaniel.l.desimone at intel.com>; Zeng, Star <star.zeng at intel.com>; Chiu,

> > Chasel <chasel.chiu at intel.com>

> > Subject: [PATCH v9] IntelFsp2Pkg: Add Config Editor tool support

> >

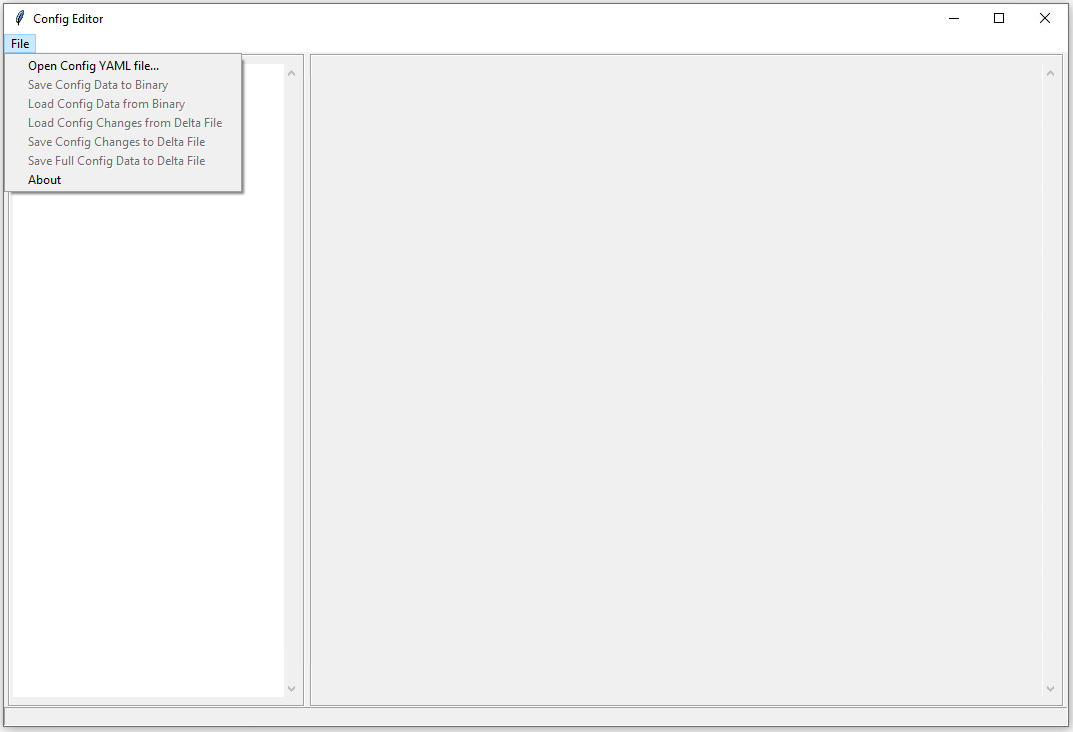

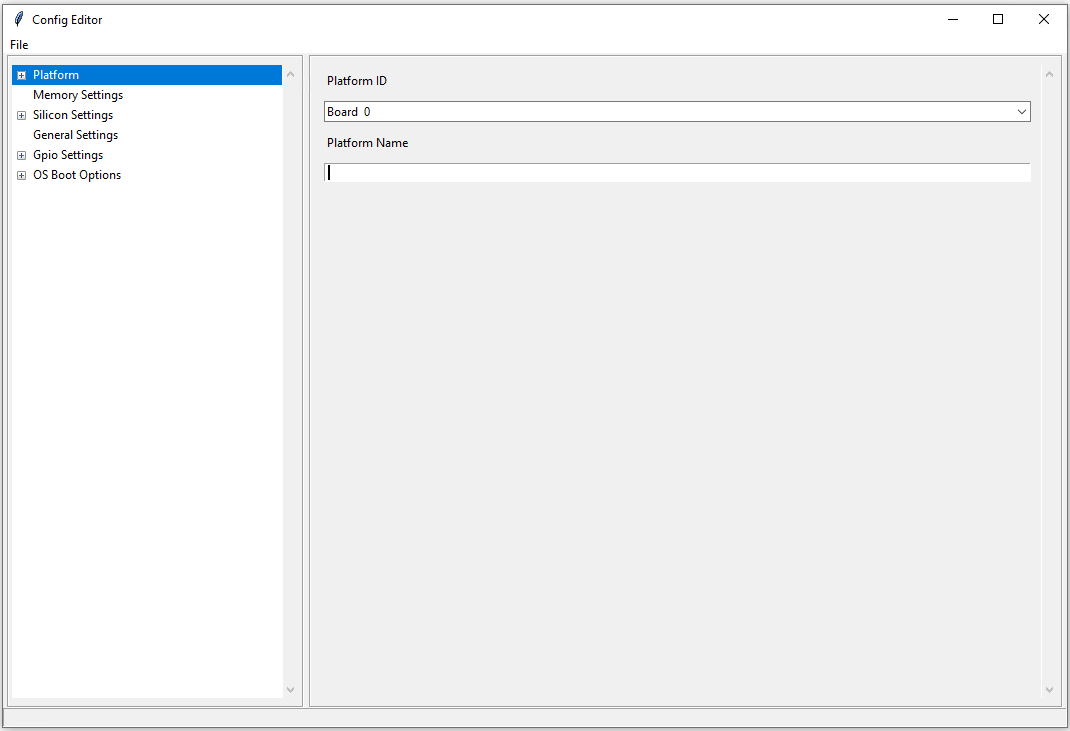

> > This is a GUI for users who would like to change

> > configuration settings directly, without having to

> > modify the source.

> >

> > This tool depends on Tkinter, the Python GUI toolkit.

> > It runs on both Windows and Linux.
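
Because the tool depends on Tkinter, a quick pre-flight check before launching it might look like the sketch below. The helper name `tkinter_available` is made up for illustration and is not part of the patch. It only checks that the module can be located, not that a display is available.

```python
import importlib.util


def tkinter_available():
    """Return True if this interpreter can locate the tkinter module."""
    return importlib.util.find_spec("tkinter") is not None


if __name__ == "__main__":
    if tkinter_available():
        print("tkinter found; the GUI should be able to start")
    else:
        print("tkinter not found; install it before running ConfigEditor.py")
```
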

> >

> > The user loads the YAML file, along with the DLT file for a

> > specific board, into the ConfigEditor and changes the desired

> > configuration values. The user then generates a new configuration

> > delta file or a config binary blob for the changed values to take

> > effect. These are the inputs to the merge tool or the stitch

> > tool, so that the new config changes can be merged and stitched

> > into the final configuration blob.
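
To make the idea of a "delta" concrete, here is a minimal sketch that assumes settings can be modelled as a flat name-to-value mapping. The function names and the `Example.*` setting names are hypothetical. The real DLT syntax and merge logic are defined by the patch's own scripts, not by this sketch.

```python
def compute_delta(defaults, edited):
    """Return only the settings whose value differs from the default."""
    return {name: value
            for name, value in edited.items()
            if defaults.get(name) != value}


def apply_delta(defaults, delta):
    """Overlay a delta on the defaults to rebuild the full configuration."""
    merged = dict(defaults)
    merged.update(delta)
    return merged


# Hypothetical setting names, used only for illustration.
defaults = {'Example.OptionA': 0, 'Example.OptionB': 1}
edited = {'Example.OptionA': 0, 'Example.OptionB': 3}

delta = compute_delta(defaults, edited)
print(delta)  # only Example.OptionB changed, so only it appears
```

The delta stays small because unchanged settings are left out, and applying it to the defaults reproduces the edited configuration.
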

> >

> > This tool also supports updating a binary directly and displaying

> > FSP information. It is also backward compatible with the BSF file format.

> >

> > Running Configuration Editor:

> > python ConfigEditor.py

> >

> > Co-authored-by: Maurice Ma <maurice.ma at intel.com>

> > Cc: Maurice Ma <maurice.ma at intel.com>

> > Cc: Nate DeSimone <nathaniel.l.desimone at intel.com>

> > Cc: Star Zeng <star.zeng at intel.com>

> > Cc: Chasel Chiu <chasel.chiu at intel.com>

> > Signed-off-by: Loo Tung Lun <tung.lun.loo at intel.com>

> > ---

> >  IntelFsp2Pkg/Tools/ConfigEditor/CommonUtility.py         |  504 ++++++

> >  IntelFsp2Pkg/Tools/ConfigEditor/ConfigEditor.py          | 1499 +++++++++++++++++

> >  IntelFsp2Pkg/Tools/ConfigEditor/GenYamlCfg.py            | 2252 ++++++++++++++++++++++++++

> >  IntelFsp2Pkg/Tools/ConfigEditor/SingleSign.py            |  324 ++++

> >  IntelFsp2Pkg/Tools/FspDscBsf2Yaml.py                     |  376 +---

> >  IntelFsp2Pkg/Tools/FspGenCfgData.py                      | 2637 ++++++++++++++++++++++++++++++

> >  IntelFsp2Pkg/Tools/UserManuals/ConfigEditorUserManual.md |   45 +

> > 7 files changed, 7340 insertions(+), 297 deletions(-)

> >

> > diff --git a/IntelFsp2Pkg/Tools/ConfigEditor/CommonUtility.py b/IntelFsp2Pkg/Tools/ConfigEditor/CommonUtility.py

> > new file mode 100644

> > index 0000000000..1229279116

> > --- /dev/null

> > +++ b/IntelFsp2Pkg/Tools/ConfigEditor/CommonUtility.py

> > @@ -0,0 +1,504 @@

> > +#!/usr/bin/env python

> >

> > +# @ CommonUtility.py

> >

> > +# Common utility script

> >

> > +#

> >

> > +# Copyright (c) 2016 - 2021, Intel Corporation. All rights reserved.<BR>

> >

> > +# SPDX-License-Identifier: BSD-2-Clause-Patent

> >

> > +#

> >

> > +##

> >

> > +

> >

> > +import os

> >

> > +import sys

> >

> > +import shutil

> >

> > +import subprocess

> >

> > +import string

> >

> > +from ctypes import ARRAY, c_char, c_uint16, c_uint32, \

> >

> > + c_uint8, Structure, sizeof

> >

> > +from importlib.machinery import SourceFileLoader

> >

> > +from SingleSign import single_sign_gen_pub_key

> >

> > +

> >

> > +

> >

> > +# Key types defined should match with cryptolib.h

> >

> > +PUB_KEY_TYPE = {

> >

> > + "RSA": 1,

> >

> > + "ECC": 2,

> >

> > + "DSA": 3,

> >

> > + }

> >

> > +

> >

> > +# Signing type schemes defined should match with cryptolib.h

> >

> > +SIGN_TYPE_SCHEME = {

> >

> > + "RSA_PKCS1": 1,

> >

> > + "RSA_PSS": 2,

> >

> > + "ECC": 3,

> >

> > + "DSA": 4,

> >

> > + }

> >

> > +

> >

> > +# Hash values defined should match with cryptolib.h

> >

> > +HASH_TYPE_VALUE = {

> >

> > + "SHA2_256": 1,

> >

> > + "SHA2_384": 2,

> >

> > + "SHA2_512": 3,

> >

> > + "SM3_256": 4,

> >

> > + }

> >

> > +

> >

> > +# Hash values defined should match with cryptolib.h

> >

> > +HASH_VAL_STRING = dict(map(reversed, HASH_TYPE_VALUE.items()))

> >

> > +

> >

> > +AUTH_TYPE_HASH_VALUE = {

> >

> > + "SHA2_256": 1,

> >

> > + "SHA2_384": 2,

> >

> > + "SHA2_512": 3,

> >

> > + "SM3_256": 4,

> >

> > + "RSA2048SHA256": 1,

> >

> > + "RSA3072SHA384": 2,

> >

> > + }

> >

> > +

> >

> > +HASH_DIGEST_SIZE = {

> >

> > + "SHA2_256": 32,

> >

> > + "SHA2_384": 48,

> >

> > + "SHA2_512": 64,

> >

> > + "SM3_256": 32,

> >

> > + }

> >

> > +

> >

> > +

> >

> > +class PUB_KEY_HDR (Structure):

> >

> > + _pack_ = 1

> >

> > + _fields_ = [

> >

> > + ('Identifier', ARRAY(c_char, 4)), # signature ('P', 'U', 'B', 'K')

> >

> > + ('KeySize', c_uint16), # Length of Public Key

> >

> > + ('KeyType', c_uint8), # RSA or ECC

> >

> > + ('Reserved', ARRAY(c_uint8, 1)),

> >

> > + ('KeyData', ARRAY(c_uint8, 0)),

> >

> > + ]

> >

> > +

> >

> > + def __init__(self):

> >

> > + self.Identifier = b'PUBK'

> >

> > +

> >

> > +

> >

> > +class SIGNATURE_HDR (Structure):

> >

> > + _pack_ = 1

> >

> > + _fields_ = [

> >

> > + ('Identifier', ARRAY(c_char, 4)),

> >

> > + ('SigSize', c_uint16),

> >

> > + ('SigType', c_uint8),

> >

> > + ('HashAlg', c_uint8),

> >

> > + ('Signature', ARRAY(c_uint8, 0)),

> >

> > + ]

> >

> > +

> >

> > + def __init__(self):

> >

> > + self.Identifier = b'SIGN'

> >

> > +

> >

> > +

> >

> > +class LZ_HEADER(Structure):

> >

> > + _pack_ = 1

> >

> > + _fields_ = [

> >

> > + ('signature', ARRAY(c_char, 4)),

> >

> > + ('compressed_len', c_uint32),

> >

> > + ('length', c_uint32),

> >

> > + ('version', c_uint16),

> >

> > + ('svn', c_uint8),

> >

> > + ('attribute', c_uint8)

> >

> > + ]

> >

> > + _compress_alg = {

> >

> > + b'LZDM': 'Dummy',

> >

> > + b'LZ4 ': 'Lz4',

> >

> > + b'LZMA': 'Lzma',

> >

> > + }

> >

> > +

> >

> > +

> >

> > +def print_bytes(data, indent=0, offset=0, show_ascii=False):

> >

> > + bytes_per_line = 16

> >

> > + printable = ' ' + string.ascii_letters + string.digits + string.punctuation

> >

> > + str_fmt = '{:s}{:04x}: {:%ds} {:s}' % (bytes_per_line * 3)

> >

> > + bytes_per_line

> >

> > + data_array = bytearray(data)

> >

> > + for idx in range(0, len(data_array), bytes_per_line):

> >

> > + hex_str = ' '.join(

> >

> > + '%02X' % val for val in data_array[idx:idx + bytes_per_line])

> >

> > + asc_str = ''.join('%c' % (val if (chr(val) in printable) else '.')

> >

> > + for val in data_array[idx:idx + bytes_per_line])

> >

> > + print(str_fmt.format(

> >

> > + indent * ' ',

> >

> > + offset + idx, hex_str,

> >

> > + ' ' + asc_str if show_ascii else ''))

> >

> > +

> >

> > +

> >

> > +def get_bits_from_bytes(bytes, start, length):

> >

> > + if length == 0:

> >

> > + return 0

> >

> > + byte_start = (start) // 8

> >

> > + byte_end = (start + length - 1) // 8

> >

> > + bit_start = start & 7

> >

> > + mask = (1 << length) - 1

> >

> > + val = bytes_to_value(bytes[byte_start:byte_end + 1])

> >

> > + val = (val >> bit_start) & mask

> >

> > + return val

> >

> > +

> >

> > +

> >

> > +def set_bits_to_bytes(bytes, start, length, bvalue):

> >

> > + if length == 0:

> >

> > + return

> >

> > + byte_start = (start) // 8

> >

> > + byte_end = (start + length - 1) // 8

> >

> > + bit_start = start & 7

> >

> > + mask = (1 << length) - 1

> >

> > + val = bytes_to_value(bytes[byte_start:byte_end + 1])

> >

> > + val &= ~(mask << bit_start)

> >

> > + val |= ((bvalue & mask) << bit_start)

> >

> > + bytes[byte_start:byte_end+1] = value_to_bytearray(

> >

> > + val,

> >

> > + byte_end + 1 - byte_start)
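
For readers following the bit-field helpers above, here is a small worked example. It reproduces the same logic in standalone form, with the parameter renamed from `bytes` to `data` so it does not shadow the builtin; that rename is only for this sketch. Values are little-endian, and `start` is a bit offset counted from bit 0 of the first byte.

```python
def bytes_to_value(data):
    return int.from_bytes(data, 'little')


def value_to_bytearray(value, length):
    return bytearray(value.to_bytes(length, 'little'))


def get_bits_from_bytes(data, start, length):
    if length == 0:
        return 0
    byte_start = start // 8
    byte_end = (start + length - 1) // 8
    bit_start = start & 7
    mask = (1 << length) - 1
    val = bytes_to_value(data[byte_start:byte_end + 1])
    return (val >> bit_start) & mask


def set_bits_to_bytes(data, start, length, bvalue):
    if length == 0:
        return
    byte_start = start // 8
    byte_end = (start + length - 1) // 8
    bit_start = start & 7
    mask = (1 << length) - 1
    val = bytes_to_value(data[byte_start:byte_end + 1])
    val &= ~(mask << bit_start)             # clear the target field
    val |= (bvalue & mask) << bit_start     # write the new field value
    data[byte_start:byte_end + 1] = value_to_bytearray(
        val, byte_end + 1 - byte_start)


buf = bytearray([0xA5, 0x3C])               # little-endian value 0x3CA5
field = get_bits_from_bytes(buf, 4, 8)      # bits 4..11 of 0x3CA5
print('0x%02X' % field)                     # 0xCA

set_bits_to_bytes(buf, 4, 8, 0xFF)          # 0x3CA5 becomes 0x3FF5
print(buf.hex())                            # f53f
```

The 8-bit field straddles both bytes, which is why the helpers read and write the whole `byte_start..byte_end` slice rather than a single byte.
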

> >

> > +

> >

> > +

> >

> > +def value_to_bytes(value, length):

> >

> > + return value.to_bytes(length, 'little')

> >

> > +

> >

> > +

> >

> > +def bytes_to_value(bytes):

> >

> > + return int.from_bytes(bytes, 'little')

> >

> > +

> >

> > +

> >

> > +def value_to_bytearray(value, length):

> >

> > + return bytearray(value_to_bytes(value, length))

> >

> > +

> >

> > +# def value_to_bytearray (value, length):

> >

> > + return bytearray(value_to_bytes(value, length))

> >

> > +

> >

> > +

> >

> > +def get_aligned_value(value, alignment=4):

> >

> > + if alignment != (1 << (alignment.bit_length() - 1)):

> >

> > + raise Exception(

> >

> > + 'Alignment (0x%x) should to be power of 2 !' % alignment)

> >

> > + value = (value + (alignment - 1)) & ~(alignment - 1)

> >

> > + return value

> >

> > +

> >

> > +

> >

> > +def get_padding_length(data_len, alignment=4):

> >

> > + new_data_len = get_aligned_value(data_len, alignment)

> >

> > + return new_data_len - data_len
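
A short worked example of the two alignment helpers above, reproduced in standalone form. The rounding relies on the alignment being a power of two, so that `~(alignment - 1)` is a mask that clears the low bits.

```python
def get_aligned_value(value, alignment=4):
    # A power of two has exactly one bit set, so it equals
    # 1 << (bit_length - 1).
    if alignment != (1 << (alignment.bit_length() - 1)):
        raise Exception(
            'Alignment (0x%x) should be a power of 2!' % alignment)
    return (value + (alignment - 1)) & ~(alignment - 1)


def get_padding_length(data_len, alignment=4):
    return get_aligned_value(data_len, alignment) - data_len


print(get_aligned_value(13))              # 16: rounded up to a multiple of 4
print(get_aligned_value(16))              # 16: already aligned
print(get_padding_length(13))             # 3: bytes needed to reach 16
print(hex(get_aligned_value(0x1001, 0x1000)))  # 0x2000
```

Passing a non-power-of-two such as 6 raises the exception, since `6 != 1 << 2`.
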

> >

> > +

> >

> > +

> >

> > +def get_file_data(file, mode='rb'):

> >

> > + return open(file, mode).read()

> >

> > +

> >

> > +

> >

> > +def gen_file_from_object(file, object):

> >

> > + open(file, 'wb').write(object)

> >

> > +

> >

> > +

> >

> > +def gen_file_with_size(file, size):

> >

> > + open(file, 'wb').write(b'\xFF' * size)
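
The three file helpers above leave closing the file handle to garbage collection. As an illustration only, and not part of the patch, the same helpers could be written with context managers so the handle is closed deterministically. The parameter name `data` replaces `object` in this sketch to avoid shadowing the builtin.

```python
import os
import tempfile


def get_file_data(file, mode='rb'):
    with open(file, mode) as fin:
        return fin.read()


def gen_file_from_object(file, data):
    with open(file, 'wb') as fout:
        fout.write(data)


def gen_file_with_size(file, size):
    # Fill with 0xFF, as in the patch.
    with open(file, 'wb') as fout:
        fout.write(b'\xFF' * size)


# Round-trip demonstration in a temporary directory.
with tempfile.TemporaryDirectory() as tmp_dir:
    blob_path = os.path.join(tmp_dir, 'blob.bin')
    gen_file_from_object(blob_path, b'\x01\x02\x03')
    blob = get_file_data(blob_path)

    fill_path = os.path.join(tmp_dir, 'fill.bin')
    gen_file_with_size(fill_path, 8)
    fill = get_file_data(fill_path)

print(blob, fill)
```
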

> >

> > +

> >

> > +

> >

> > +def check_files_exist(base_name_list, dir='', ext=''):

> >

> > + for each in base_name_list:

> >

> > + if not os.path.exists(os.path.join(dir, each + ext)):

> >

> > + return False

> >

> > + return True

> >

> > +

> >

> > +

> >

> > +def load_source(name, filepath):

> >

> > + mod = SourceFileLoader(name, filepath).load_module()

> >

> > + return mod

> >

> > +

> >

> > +

> >

> > +def get_openssl_path():

> >

> > + if os.name == 'nt':

> >

> > + if 'OPENSSL_PATH' not in os.environ:

> >

> > + openssl_dir = "C:\\Openssl\\bin\\"

> >

> > + if os.path.exists(openssl_dir):

> >

> > + os.environ['OPENSSL_PATH'] = openssl_dir

> >

> > + else:

> >

> > + os.environ['OPENSSL_PATH'] = "C:\\Openssl\\"

> >

> > + if 'OPENSSL_CONF' not in os.environ:

> >

> > + openssl_cfg = "C:\\Openssl\\openssl.cfg"

> >

> > + if os.path.exists(openssl_cfg):

> >

> > + os.environ['OPENSSL_CONF'] = openssl_cfg

> >

> > + openssl = os.path.join(

> >

> > + os.environ.get('OPENSSL_PATH', ''),

> >

> > + 'openssl.exe')

> >

> > + else:

> >

> > + # Get openssl path for Linux cases

> >

> > + openssl = shutil.which('openssl')

> >

> > +

> >

> > + return openssl

> >

> > +

> >

> > +

> >

> > +def run_process(arg_list, print_cmd=False, capture_out=False):

> >

> > + sys.stdout.flush()

> >

> > + if os.name == 'nt' and os.path.splitext(arg_list[0])[1] == '' and \

> >

> > + os.path.exists(arg_list[0] + '.exe'):

> >

> > + arg_list[0] += '.exe'

> >

> > + if print_cmd:

> >

> > + print(' '.join(arg_list))

> >

> > +

> >

> > + exc = None

> >

> > + result = 0

> >

> > + output = ''

> >

> > + try:

> >

> > + if capture_out:

> >

> > + output = subprocess.check_output(arg_list).decode()

> >

> > + else:

> >

> > + result = subprocess.call(arg_list)

> >

> > + except Exception as ex:

> >

> > + result = 1

> >

> > + exc = ex

> >

> > +

> >

> > + if result:

> >

> > + if not print_cmd:

> >

> > + print('Error in running process:\n %s' % ' '.join(arg_list))

> >

> > + if exc is None:

> >

> > + sys.exit(1)

> >

> > + else:

> >

> > + raise exc

> >

> > +

> >

> > + return output

> >

> > +

> >

> > +

> >

> > +# Adjust hash type algorithm based on Public key file

> >

> > +def adjust_hash_type(pub_key_file):

> >

> > + key_type = get_key_type(pub_key_file)

> >

> > + if key_type == 'RSA2048':

> >

> > + hash_type = 'SHA2_256'

> >

> > + elif key_type == 'RSA3072':

> >

> > + hash_type = 'SHA2_384'

> >

> > + else:

> >

> > + hash_type = None

> >

> > +

> >

> > + return hash_type

> >

> > +

> >

> > +

> >

> > +def rsa_sign_file(

> >

> > + priv_key, pub_key, hash_type, sign_scheme,

> >

> > + in_file, out_file, inc_dat=False, inc_key=False):

> >

> > +

> >

> > + bins = bytearray()

> >

> > + if inc_dat:

> >

> > + bins.extend(get_file_data(in_file))

> >

> > +

> >

> > +

> >

> > +# def single_sign_file(priv_key, hash_type, sign_scheme, in_file, out_file):

> >

> > +

> >

> > + out_data = get_file_data(out_file)

> >

> > +

> >

> > + sign = SIGNATURE_HDR()

> >

> > + sign.SigSize = len(out_data)

> >

> > + sign.SigType = SIGN_TYPE_SCHEME[sign_scheme]

> >

> > + sign.HashAlg = HASH_TYPE_VALUE[hash_type]

> >

> > +

> >

> > + bins.extend(bytearray(sign) + out_data)

> >

> > + if inc_key:

> >

> > + key = gen_pub_key(priv_key, pub_key)

> >

> > + bins.extend(key)

> >

> > +

> >

> > + if len(bins) != len(out_data):

> >

> > + gen_file_from_object(out_file, bins)

> >

> > +

> >

> > +

> >

> > +def get_key_type(in_key):

> >

> > +

> >

> > + # Check in_key is file or key Id

> >

> > + if not os.path.exists(in_key):

> >

> > + key = bytearray(gen_pub_key(in_key))

> >

> > + else:

> >

> > + # Check for public key in binary format.

> >

> > + key = bytearray(get_file_data(in_key))

> >

> > +

> >

> > + pub_key_hdr = PUB_KEY_HDR.from_buffer(key)

> >

> > + if pub_key_hdr.Identifier != b'PUBK':

> >

> > + pub_key = gen_pub_key(in_key)

> >

> > + pub_key_hdr = PUB_KEY_HDR.from_buffer(pub_key)

> >

> > +

> >

> > + key_type = next(

> >

> > + (key for key,

> >

> > + value in PUB_KEY_TYPE.items() if value == pub_key_hdr.KeyType))

> >

> > + return '%s%d' % (key_type, (pub_key_hdr.KeySize - 4) * 8)

> >

> > +

> >

> > +

> >

> > +def get_auth_hash_type(key_type, sign_scheme):

> >

> > + if key_type == "RSA2048" and sign_scheme == "RSA_PKCS1":

> >

> > + hash_type = 'SHA2_256'

> >

> > + auth_type = 'RSA2048_PKCS1_SHA2_256'

> >

> > + elif key_type == "RSA3072" and sign_scheme == "RSA_PKCS1":

> >

> > + hash_type = 'SHA2_384'

> >

> > + auth_type = 'RSA3072_PKCS1_SHA2_384'

> >

> > + elif key_type == "RSA2048" and sign_scheme == "RSA_PSS":

> >

> > + hash_type = 'SHA2_256'

> >

> > + auth_type = 'RSA2048_PSS_SHA2_256'

> >

> > + elif key_type == "RSA3072" and sign_scheme == "RSA_PSS":

> >

> > + hash_type = 'SHA2_384'

> >

> > + auth_type = 'RSA3072_PSS_SHA2_384'

> >

> > + else:

> >

> > + hash_type = ''

> >

> > + auth_type = ''

> >

> > + return auth_type, hash_type
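
The four branches above follow one pattern: the key type fixes the hash, and the scheme contributes a tag to the name. A table-driven sketch of the same mapping is shown below as an illustration. The two lookup tables are names introduced here, not part of the patch, and the return values are meant to match the `if`/`elif` chain for the combinations it lists.

```python
# Lookup tables introduced for this sketch only.
_HASH_FOR_KEY = {'RSA2048': 'SHA2_256', 'RSA3072': 'SHA2_384'}
_SCHEME_TAG = {'RSA_PKCS1': 'PKCS1', 'RSA_PSS': 'PSS'}


def get_auth_hash_type(key_type, sign_scheme):
    hash_type = _HASH_FOR_KEY.get(key_type)
    tag = _SCHEME_TAG.get(sign_scheme)
    if hash_type is None or tag is None:
        # Unsupported combination: same empty result as the else branch.
        return '', ''
    auth_type = '%s_%s_%s' % (key_type, tag, hash_type)
    return auth_type, hash_type


print(get_auth_hash_type('RSA2048', 'RSA_PKCS1'))
print(get_auth_hash_type('RSA3072', 'RSA_PSS'))
print(get_auth_hash_type('ECC', 'RSA_PSS'))
```
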

> >

> > +

> >

> > +

> >

> > +# def single_sign_gen_pub_key(in_key, pub_key_file=None):

> >

> > +

> >

> > +

> >

> > +def gen_pub_key(in_key, pub_key=None):

> >

> > +

> >

> > + keydata = single_sign_gen_pub_key(in_key, pub_key)

> >

> > +

> >

> > + publickey = PUB_KEY_HDR()

> >

> > + publickey.KeySize = len(keydata)

> >

> > + publickey.KeyType = PUB_KEY_TYPE['RSA']

> >

> > +

> >

> > + key = bytearray(publickey) + keydata

> >

> > +

> >

> > + if pub_key:

> >

> > + gen_file_from_object(pub_key, key)

> >

> > +

> >

> > + return key

> >

> > +

> >

> > +

> >

> > +def decompress(in_file, out_file, tool_dir=''):

> >

> > + if not os.path.isfile(in_file):

> >

> > + raise Exception("Invalid input file '%s' !" % in_file)

> >

> > +

> >

> > + # Remove the Lz Header

> >

> > + fi = open(in_file, 'rb')

> >

> > + di = bytearray(fi.read())

> >

> > + fi.close()

> >

> > +

> >

> > + lz_hdr = LZ_HEADER.from_buffer(di)

> >

> > + offset = sizeof(lz_hdr)

> >

> > + if lz_hdr.signature == b"LZDM" or lz_hdr.compressed_len == 0:

> >

> > + fo = open(out_file, 'wb')

> >

> > + fo.write(di[offset:offset + lz_hdr.compressed_len])

> >

> > + fo.close()

> >

> > + return

> >

> > +

> >

> > + temp = os.path.splitext(out_file)[0] + '.tmp'

> >

> > + if lz_hdr.signature == b"LZMA":

> >

> > + alg = "Lzma"

> >

> > + elif lz_hdr.signature == b"LZ4 ":

> >

> > + alg = "Lz4"

> >

> > + else:

> >

> > + raise Exception("Unsupported compression '%s' !" % lz_hdr.signature)

> >

> > +

> >

> > + fo = open(temp, 'wb')

> >

> > + fo.write(di[offset:offset + lz_hdr.compressed_len])

> >

> > + fo.close()

> >

> > +

> >

> > + compress_tool = "%sCompress" % alg

> >

> > + if alg == "Lz4":

> >

> > + try:

> >

> > + cmdline = [

> >

> > + os.path.join(tool_dir, compress_tool),

> >

> > + "-d",

> >

> > + "-o", out_file,

> >

> > + temp]

> >

> > + run_process(cmdline, False, True)

> >

> > + except Exception:

> >

> > + msg_string = "Could not find/use CompressLz4 tool, " \

> >

> > + "trying with python lz4..."

> >

> > + print(msg_string)

> >

> > + try:

> >

> > + import lz4.block

> >

> > + if lz4.VERSION != '3.1.1':

> >

> > + msg_string = "Recommended lz4 module version " \

> >

> > + "is '3.1.1'," + lz4.VERSION \

> >

> > + + " is currently installed."

> >

> > + print(msg_string)

> >

> > + except ImportError:

> >

> > + msg_string = "Could not import lz4, use " \

> >

> > + "'python -m pip install lz4==3.1.1' " \

> >

> > + "to install it."

> >

> > + print(msg_string)

> >

> > + exit(1)

> >

> > + decompress_data = lz4.block.decompress(get_file_data(temp))

> >

> > + with open(out_file, "wb") as lz4bin:

> >

> > + lz4bin.write(decompress_data)

> >

> > + else:

> >

> > + cmdline = [

> >

> > + os.path.join(tool_dir, compress_tool),

> >

> > + "-d",

> >

> > + "-o", out_file,

> >

> > + temp]

> >

> > + run_process(cmdline, False, True)

> >

> > + os.remove(temp)

> >

> > +

> >

> > +

> >

> > +def compress(in_file, alg, svn=0, out_path='', tool_dir=''):

> >

> > + if not os.path.isfile(in_file):

> >

> > + raise Exception("Invalid input file '%s' !" % in_file)

> >

> > +

> >

> > + basename, ext = os.path.splitext(os.path.basename(in_file))

> >

> > + if out_path:

> >

> > + if os.path.isdir(out_path):

> >

> > + out_file = os.path.join(out_path, basename + '.lz')

> >

> > + else:

> >

> > + out_file = os.path.join(out_path)

> >

> > + else:

> >

> > + out_file = os.path.splitext(in_file)[0] + '.lz'

> >

> > +

> >

> > + if alg == "Lzma":

> >

> > + sig = "LZMA"

> >

> > + elif alg == "Tiano":

> >

> > + sig = "LZUF"

> >

> > + elif alg == "Lz4":

> >

> > + sig = "LZ4 "

> >

> > + elif alg == "Dummy":

> >

> > + sig = "LZDM"

> >

> > + else:

> >

> > + raise Exception("Unsupported compression '%s' !" % alg)

> >

> > +

> >

> > + in_len = os.path.getsize(in_file)

> >

> > + if in_len > 0:

> >

> > + compress_tool = "%sCompress" % alg

> >

> > + if sig == "LZDM":

> >

> > + shutil.copy(in_file, out_file)

> >

> > + compress_data = get_file_data(out_file)

> >

> > + elif sig == "LZ4 ":

> >

> > + try:

> >

> > + cmdline = [

> >

> > + os.path.join(tool_dir, compress_tool),

> >

> > + "-e",

> >

> > + "-o", out_file,

> >

> > + in_file]

> >

> > + run_process(cmdline, False, True)

> >

> > + compress_data = get_file_data(out_file)

> >

> > + except Exception:

> >

> > + msg_string = "Could not find/use CompressLz4 tool, " \

> >

> > + "trying with python lz4..."

> >

> > + print(msg_string)

> >

> > + try:

> >

> > + import lz4.block

> >

> > + if lz4.VERSION != '3.1.1':

> >

> > + msg_string = "Recommended lz4 module version " \

> >

> > + "is '3.1.1', " + lz4.VERSION \

> >

> > + + " is currently installed."

> >

> > + print(msg_string)

> >

> > + except ImportError:

> >

> > + msg_string = "Could not import lz4, use " \

> >

> > + "'python -m pip install lz4==3.1.1' " \

> >

> > + "to install it."

> >

> > + print(msg_string)

> >

> > + exit(1)

> >

> > + compress_data = lz4.block.compress(

> >

> > + get_file_data(in_file),

> >

> > + mode='high_compression')

> >

> > + elif sig == "LZMA":

> >

> > + cmdline = [

> >

> > + os.path.join(tool_dir, compress_tool),

> >

> > + "-e",

> >

> > + "-o", out_file,

> >

> > + in_file]

> >

> > + run_process(cmdline, False, True)

> >

> > + compress_data = get_file_data(out_file)

> >

> > + else:

> >

> > + compress_data = bytearray()

> >

> > +

> >

> > + lz_hdr = LZ_HEADER()

> >

> > + lz_hdr.signature = sig.encode()

> >

> > + lz_hdr.svn = svn

> >

> > + lz_hdr.compressed_len = len(compress_data)

> >

> > + lz_hdr.length = os.path.getsize(in_file)

> >

> > + data = bytearray()

> >

> > + data.extend(lz_hdr)

> >

> > + data.extend(compress_data)

> >

> > + gen_file_from_object(out_file, data)

> >

> > +

> >

> > + return out_file
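
To illustrate the container layout that `compress()` and `decompress()` share, here is a standalone sketch of the 16-byte `LZ_HEADER` followed by the payload, using the "Dummy" (`LZDM`) case where the payload is stored uncompressed. The helpers `wrap_dummy` and `unwrap` are hypothetical names for this example. The array field is written as `c_char * 4`, which is equivalent to the `ARRAY(c_char, 4)` form used in the patch.

```python
from ctypes import Structure, c_char, c_uint8, c_uint16, c_uint32, sizeof


class LZ_HEADER(Structure):
    _pack_ = 1
    _fields_ = [
        ('signature', c_char * 4),
        ('compressed_len', c_uint32),
        ('length', c_uint32),
        ('version', c_uint16),
        ('svn', c_uint8),
        ('attribute', c_uint8),
    ]


def wrap_dummy(payload, svn=0):
    """Prefix an uncompressed payload with an LZDM header."""
    hdr = LZ_HEADER()
    hdr.signature = b'LZDM'
    hdr.svn = svn
    hdr.compressed_len = len(payload)
    hdr.length = len(payload)
    return bytearray(hdr) + bytearray(payload)


def unwrap(blob):
    """Return (signature, payload) from a header-prefixed bytearray."""
    hdr = LZ_HEADER.from_buffer(blob)
    offset = sizeof(hdr)
    return hdr.signature, bytes(blob[offset:offset + hdr.compressed_len])


blob = wrap_dummy(b'hello', svn=2)
signature, payload = unwrap(blob)
print(sizeof(LZ_HEADER), signature, payload)
```

Note that `from_buffer` needs a writable buffer, which is why the blob is a `bytearray`, as in the patch.
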

> >

> > diff --git a/IntelFsp2Pkg/Tools/ConfigEditor/ConfigEditor.py b/IntelFsp2Pkg/Tools/ConfigEditor/ConfigEditor.py

> > new file mode 100644

> > index 0000000000..a7f79bbc96

> > --- /dev/null

> > +++ b/IntelFsp2Pkg/Tools/ConfigEditor/ConfigEditor.py

> > @@ -0,0 +1,1499 @@

> > +# @ ConfigEditor.py

> >

> > +#

> >

> > +# Copyright(c) 2018 - 2021, Intel Corporation. All rights reserved.<BR>

> >

> > +# SPDX-License-Identifier: BSD-2-Clause-Patent

> >

> > +#

> >

> > +##

> >

> > +

> >

> > +import os

> >

> > +import sys

> >

> > +import marshal

> >

> > +import tkinter

> >

> > +import tkinter.ttk as ttk

> >

> > +import tkinter.messagebox as messagebox

> >

> > +import tkinter.filedialog as filedialog

> >

> > +

> >

> > +from pathlib import Path

> >

> > +from GenYamlCfg import CGenYamlCfg, bytes_to_value, \

> >

> > + bytes_to_bracket_str, value_to_bytes, array_str_to_value

> >

> > +from ctypes import sizeof, Structure, ARRAY, c_uint8, c_uint64, c_char, \

> >

> > + c_uint32, c_uint16

> >

> > +from functools import reduce

> >

> > +

> >

> > +sys.path.insert(0, '..')

> >

> > +from FspDscBsf2Yaml import bsf_to_dsc, dsc_to_yaml # noqa

> >

> > +

> >

> > +

> >

> > +sys.dont_write_bytecode = True

> >

> > +

> >

> > +

> >

> > +class create_tool_tip(object):

> >

> > + '''

> >

> > + create a tooltip for a given widget

> >

> > + '''

> >

> > + in_progress = False

> >

> > +

> >

> > + def __init__(self, widget, text=''):

> >

> > + self.top_win = None

> >

> > + self.widget = widget

> >

> > + self.text = text

> >

> > + self.widget.bind("<Enter>", self.enter)

> >

> > + self.widget.bind("<Leave>", self.leave)

> >

> > +

> >

> > + def enter(self, event=None):

> >

> > + if self.in_progress:

> >

> > + return

> >

> > + if self.widget.winfo_class() == 'Treeview':

> >

> > + # Only show help when cursor is on row header.

> >

> > + rowid = self.widget.identify_row(event.y)

> >

> > + if rowid != '':

> >

> > + return

> >

> > + else:

> >

> > + x, y, cx, cy = self.widget.bbox("insert")

> >

> > +

> >

> > + cursor = self.widget.winfo_pointerxy()

> >

> > + x = self.widget.winfo_rootx() + 35

> >

> > + y = self.widget.winfo_rooty() + 20

> >

> > + if cursor[1] > y and cursor[1] < y + 20:

> >

> > + y += 20

> >

> > +

> >

> > + # creates a toplevel window

> >

> > + self.top_win = tkinter.Toplevel(self.widget)

> >

> > + # Leaves only the label and removes the app window

> >

> > + self.top_win.wm_overrideredirect(True)

> >

> > + self.top_win.wm_geometry("+%d+%d" % (x, y))

> >

> > + label = tkinter.Message(self.top_win,

> >

> > + text=self.text,

> >

> > + justify='left',

> >

> > + background='bisque',

> >

> > + relief='solid',

> >

> > + borderwidth=1,

> >

> > + font=("times", "10", "normal"))

> >

> > + label.pack(ipadx=1)

> >

> > + self.in_progress = True

> >

> > +

> >

> > + def leave(self, event=None):

> >

> > + if self.top_win:

> >

> > + self.top_win.destroy()

> >

> > + self.in_progress = False

> >

> > +

> >

> > +

> >

> > +class validating_entry(tkinter.Entry):

> >

> > + def __init__(self, master, **kw):

> >

> > + tkinter.Entry.__init__(*(self, master), **kw)

> >

> > + self.parent = master

> >

> > + self.old_value = ''

> >

> > + self.last_value = ''

> >

> > + self.variable = tkinter.StringVar()

> >

> > + self.variable.trace("w", self.callback)

> >

> > + self.config(textvariable=self.variable)

> >

> > + self.config({"background": "#c0c0c0"})

> >

> > + self.bind("<Return>", self.move_next)

> >

> > + self.bind("<Tab>", self.move_next)

> >

> > + self.bind("<Escape>", self.cancel)

> >

> > + for each in ['BackSpace', 'Delete']:

> >

> > + self.bind("<%s>" % each, self.ignore)

> >

> > + self.display(None)

> >

> > +

> >

> > + def ignore(self, even):

> >

> > + return "break"

> >

> > +

> >

> > + def move_next(self, event):

> >

> > + if self.row < 0:

> >

> > + return

> >

> > + row, col = self.row, self.col

> >

> > + txt, row_id, col_id = self.parent.get_next_cell(row, col)

> >

> > + self.display(txt, row_id, col_id)

> >

> > + return "break"

> >

> > +

> >

> > + def cancel(self, event):

> >

> > + self.variable.set(self.old_value)

> >

> > + self.display(None)

> >

> > +

> >

> > + def display(self, txt, row_id='', col_id=''):

> >

> > + if txt is None:

> >

> > + self.row = -1

> >

> > + self.col = -1

> >

> > + self.place_forget()

> >

> > + else:

> >

> > + row = int('0x' + row_id[1:], 0) - 1

> >

> > + col = int(col_id[1:]) - 1

> >

> > + self.row = row

> >

> > + self.col = col

> >

> > + self.old_value = txt

> >

> > + self.last_value = txt

> >

> > + x, y, width, height = self.parent.bbox(row_id, col)

> >

> > + self.place(x=x, y=y, w=width)

> >

> > + self.variable.set(txt)

> >

> > + self.focus_set()

> >

> > + self.icursor(0)

> >

> > +

> >

> > + def callback(self, *Args):

> >

> > + cur_val = self.variable.get()

> >

> > + new_val = self.validate(cur_val)

> >

> > + if new_val is not None and self.row >= 0:

> >

> > + self.last_value = new_val

> >

> > + self.parent.set_cell(self.row, self.col, new_val)

> >

> > + self.variable.set(self.last_value)

> >

> > +

> >

> > + def validate(self, value):

> >

> > + if len(value) > 0:

> >

> > + try:

> >

> > + int(value, 16)

> >

> > + except Exception:

> >

> > + return None

> >

> > +

> >

> > + # Normalize the cell format

> >

> > + self.update()

> >

> > + cell_width = self.winfo_width()

> >

> > + max_len = custom_table.to_byte_length(cell_width) * 2

> >

> > + cur_pos = self.index("insert")

> >

> > + if cur_pos == max_len + 1:

> >

> > + value = value[-max_len:]

> >

> > + else:

> >

> > + value = value[:max_len]

> >

> > + if value == '':

> >

> > + value = '0'

> >

> > + fmt = '%%0%dX' % max_len

> >

> > + return fmt % int(value, 16)
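
The normalization done by `validate()` can be illustrated without any Tk widgets. The sketch below is a simplified, hypothetical `normalize_hex` helper: it takes the cell size in bytes directly instead of deriving it from the widget width, and it leaves out the cursor-position branch that keeps the trailing digits.

```python
def normalize_hex(text, byte_len):
    """Return text as zero-padded upper-case hex, or None if invalid."""
    max_len = byte_len * 2          # two hex digits per byte
    if text:
        try:
            int(text, 16)
        except ValueError:
            return None
    text = text[:max_len] or '0'    # truncate, and treat empty as zero
    return '%0*X' % (max_len, int(text, 16))


print(normalize_hex('1f', 2))       # 001F
print(normalize_hex('', 1))         # 00
print(normalize_hex('12345', 2))    # 1234
print(normalize_hex('xyz', 1))      # None
```
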

> >

> > +

> >

> > +

> >

> > +class custom_table(ttk.Treeview):

> >

> > + _Padding = 20

> >

> > + _Char_width = 6

> >

> > +

> >

> > + def __init__(self, parent, col_hdr, bins):

> >

> > + cols = len(col_hdr)

> >

> > +

> >

> > + col_byte_len = []

> >

> > + for col in range(cols): # Columns

> >

> > + col_byte_len.append(int(col_hdr[col].split(':')[1]))

> >

> > +

> >

> > + byte_len = sum(col_byte_len)

> >

> > + rows = (len(bins) + byte_len - 1) // byte_len

> >

> > +

> >

> > + self.rows = rows

> >

> > + self.cols = cols

> >

> > + self.col_byte_len = col_byte_len

> >

> > + self.col_hdr = col_hdr

> >

> > +

> >

> > + self.size = len(bins)

> >

> > + self.last_dir = ''

> >

> > +

> >

> > + style = ttk.Style()

> >

> > + style.configure("Custom.Treeview.Heading",

> >

> > + font=('calibri', 10, 'bold'),

> >

> > + foreground="blue")

> >

> > + ttk.Treeview.__init__(self, parent, height=rows,

> >

> > + columns=[''] + col_hdr, show='headings',

> >

> > + style="Custom.Treeview",

> >

> > + selectmode='none')

> >

> > + self.bind("<Button-1>", self.click)

> >

> > + self.bind("<FocusOut>", self.focus_out)

> >

> > + self.entry = validating_entry(self, width=4, justify=tkinter.CENTER)

> >

> > +

> >

> > + self.heading(0, text='LOAD')

> >

> > + self.column(0, width=60, stretch=0, anchor=tkinter.CENTER)

> >

> > +

> >

> > + for col in range(cols): # Columns

> >

> > + text = col_hdr[col].split(':')[0]

> >

> > + byte_len = int(col_hdr[col].split(':')[1])

> >

> > + self.heading(col+1, text=text)

> >

> > + self.column(col+1, width=self.to_cell_width(byte_len),

> >

> > + stretch=0, anchor=tkinter.CENTER)

> >

> > + idx = 0

> >

> > + for row in range(rows): # Rows

> >

> > + text = '%04X' % (row * len(col_hdr))

> >

> > + vals = ['%04X:' % (cols * row)]

> >

> > + for col in range(cols): # Columns

> >

> > + if idx >= len(bins):

> >

> > + break

> >

> > + byte_len = int(col_hdr[col].split(':')[1])

> >

> > + value = bytes_to_value(bins[idx:idx+byte_len])

> >

> > + hex = ("%%0%dX" % (byte_len * 2)) % value

> >

> > + vals.append(hex)

> >

> > + idx += byte_len

> >

> > + self.insert('', 'end', values=tuple(vals))

> >

> > + if idx >= len(bins):

> >

> > + break

> >

> > +

> >

> > + @staticmethod

> >

> > + def to_cell_width(byte_len):

> >

> > + return byte_len * 2 * custom_table._Char_width + custom_table._Padding

> >

> > +

> >

> > + @staticmethod

> >

> > + def to_byte_length(cell_width):

> >

> > + return(cell_width - custom_table._Padding) \

> >

> > + // (2 * custom_table._Char_width)

> >

> > +

> >

> > + def focus_out(self, event):

> >

> > + self.entry.display(None)

> >

> > +

> >

> > + def refresh_bin(self, bins):

> >

> > + if not bins:

> >

> > + return

> >

> > +

> >

> > + # Reload binary into widget

> >

> > + bin_len = len(bins)

> >

> > + for row in range(self.rows):

> >

> > + iid = self.get_children()[row]

> >

> > + for col in range(self.cols):

> >

> > + idx = row * sum(self.col_byte_len) + \

> >

> > + sum(self.col_byte_len[:col])

> >

> > + byte_len = self.col_byte_len[col]

> >

> > + if idx + byte_len <= self.size:

> >

> > + byte_len = int(self.col_hdr[col].split(':')[1])

> >

> > + if idx + byte_len > bin_len:

> >

> > + val = 0

> >

> > + else:

> >

> > + val = bytes_to_value(bins[idx:idx+byte_len])

> >

> > + hex_val = ("%%0%dX" % (byte_len * 2)) % val

> >

> > + self.set(iid, col + 1, hex_val)

> >

> > +

> >

> > + def get_cell(self, row, col):

> >

> > + iid = self.get_children()[row]

> >

> > + txt = self.item(iid, 'values')[col]

> >

> > + return txt

> >

> > +

> >

> > + def get_next_cell(self, row, col):

> >

> > + rows = self.get_children()

> >

> > + col += 1

> >

> > + if col > self.cols:

> >

> > + col = 1

> >

> > + row += 1

> >

> > + cnt = row * sum(self.col_byte_len) + sum(self.col_byte_len[:col])

> >

> > + if cnt > self.size:

> >

> > + # Reached the last cell, so roll back to beginning

> >

> > + row = 0

> >

> > + col = 1

> >

> > +

> >

> > + txt = self.get_cell(row, col)

> >

> > + row_id = rows[row]

> >

> > + col_id = '#%d' % (col + 1)

> >

> > + return(txt, row_id, col_id)

> >

> > +

> >

> > + def set_cell(self, row, col, val):

> >

> > + iid = self.get_children()[row]

> >

> > + self.set(iid, col, val)

> >

> > +

> >

> > + def load_bin(self):

> >

> > + # Load binary from file

> >

> > + path = filedialog.askopenfilename(

> >

> > + initialdir=self.last_dir,

> >

> > + title="Load binary file",

> >

> > + filetypes=(("Binary files", "*.bin"), (

> >

> > + "binary files", "*.bin")))

> >

> > + if path:

> >

> > + self.last_dir = os.path.dirname(path)

> >

> > + fd = open(path, 'rb')

> >

> > + bins = bytearray(fd.read())[:self.size]

> >

> > + fd.close()

> >

> > + bins.extend(b'\x00' * (self.size - len(bins)))

> >

> > + return bins

> >

> > +

> >

> > + return None

> >

> > +

> >

> > + def click(self, event):

> >

> > + row_id = self.identify_row(event.y)

> >

> > + col_id = self.identify_column(event.x)

> >

> > + if row_id == '' and col_id == '#1':

> >

> > + # Clicked on "LOAD" cell

> >

> > + bins = self.load_bin()

> >

> > + self.refresh_bin(bins)

> >

> > + return

> >

> > +

> >

> > + if col_id == '#1':

> >

> > + # Clicked on column 1(Offset column)

> >

> > + return

> >

> > +

> >

> > + item = self.identify('item', event.x, event.y)

> >

> > + if not item or not col_id:

> >

> > + # Not clicked on valid cell

> >

> > + return

> >

> > +

> >

> > + # Clicked cell

> >

> > + row = int('0x' + row_id[1:], 0) - 1

> >

> > + col = int(col_id[1:]) - 1

> >

> > + if row * self.cols + col > self.size:

> >

> > + return

> >

> > +

> >

> > + vals = self.item(item, 'values')

> >

> > + if col < len(vals):

> >

> > + txt = self.item(item, 'values')[col]

> >

> > + self.entry.display(txt, row_id, col_id)

> >

> > +

> >

> > + def get(self):

> >

> > + bins = bytearray()

> >

> > + row_ids = self.get_children()

> >

> > + for row_id in row_ids:

> >

> > + row = int('0x' + row_id[1:], 0) - 1

> >

> > + for col in range(self.cols):

> >

> > + idx = row * sum(self.col_byte_len) + \

> >

> > + sum(self.col_byte_len[:col])

> >

> > + byte_len = self.col_byte_len[col]

> >

> > + if idx + byte_len > self.size:

> >

> > + break

> >

> > + hex = self.item(row_id, 'values')[col + 1]

> >

> > + values = value_to_bytes(int(hex, 16)

> >

> > + & ((1 << byte_len * 8) - 1), byte_len)

> >

> > + bins.extend(values)

> >

> > + return bins

> >

> > +

> >

> > +

> >

> > +class c_uint24(Structure):

> >

> > + """Little-Endian 24-bit Unsigned Integer"""

> >

> > + _pack_ = 1

> >

> > + _fields_ = [('Data', (c_uint8 * 3))]

> >

> > +

> >

> > + def __init__(self, val=0):

> >

> > + self.set_value(val)

> >

> > +

> >

> > + def __str__(self, indent=0):

> >

> > + return '0x%.6x' % self.value

> >

> > +

> >

> > + def __int__(self):

> >

> > + return self.get_value()

> >

> > +

> >

> > + def set_value(self, val):

> >

> > + self.Data[0:3] = Val2Bytes(val, 3)

> >

> > +

> >

> > + def get_value(self):

> >

> > + return Bytes2Val(self.Data[0:3])

> >

> > +

> >

> > + value = property(get_value, set_value)

> >

> > +

> >

> > +

> >

> > +class EFI_FIRMWARE_VOLUME_HEADER(Structure):

> >

> > + _fields_ = [

> >

> > + ('ZeroVector', ARRAY(c_uint8, 16)),

> >

> > + ('FileSystemGuid', ARRAY(c_uint8, 16)),

> >

> > + ('FvLength', c_uint64),

> >

> > + ('Signature', ARRAY(c_char, 4)),

> >

> > + ('Attributes', c_uint32),

> >

> > + ('HeaderLength', c_uint16),

> >

> > + ('Checksum', c_uint16),

> >

> > + ('ExtHeaderOffset', c_uint16),

> >

> > + ('Reserved', c_uint8),

> >

> > + ('Revision', c_uint8)

> >

> > + ]

> >

> > +

> >

> > +

> >

> > +class EFI_FIRMWARE_VOLUME_EXT_HEADER(Structure):

> >

> > + _fields_ = [

> >

> > + ('FvName', ARRAY(c_uint8, 16)),

> >

> > + ('ExtHeaderSize', c_uint32)

> >

> > + ]

> >

> > +

> >

> > +

> >

> > +class EFI_FFS_INTEGRITY_CHECK(Structure):

> >

> > + _fields_ = [

> >

> > + ('Header', c_uint8),

> >

> > + ('File', c_uint8)

> >

> > + ]

> >

> > +

> >

> > +

> >

> > +class EFI_FFS_FILE_HEADER(Structure):

> >

> > + _fields_ = [

> >

> > + ('Name', ARRAY(c_uint8, 16)),

> >

> > + ('IntegrityCheck', EFI_FFS_INTEGRITY_CHECK),

> >

> > + ('Type', c_uint8),

> >

> > + ('Attributes', c_uint8),

> >

> > + ('Size', c_uint24),

> >

> > + ('State', c_uint8)

> >

> > + ]

> >

> > +

> >

> > +

> >

> > +class EFI_COMMON_SECTION_HEADER(Structure):

> >

> > + _fields_ = [

> >

> > + ('Size', c_uint24),

> >

> > + ('Type', c_uint8)

> >

> > + ]

> >

> > +

> >

> > +

> >

> > +class EFI_SECTION_TYPE:

> >

> > + """Enumeration of all valid firmware file section types."""

> >

> > + ALL = 0x00

> >

> > + COMPRESSION = 0x01

> >

> > + GUID_DEFINED = 0x02

> >

> > + DISPOSABLE = 0x03

> >

> > + PE32 = 0x10

> >

> > + PIC = 0x11

> >

> > + TE = 0x12

> >

> > + DXE_DEPEX = 0x13

> >

> > + VERSION = 0x14

> >

> > + USER_INTERFACE = 0x15

> >

> > + COMPATIBILITY16 = 0x16

> >

> > + FIRMWARE_VOLUME_IMAGE = 0x17

> >

> > + FREEFORM_SUBTYPE_GUID = 0x18

> >

> > + RAW = 0x19

> >

> > + PEI_DEPEX = 0x1b

> >

> > + SMM_DEPEX = 0x1c

> >

> > +

> >

> > +

> >

> > +class FSP_COMMON_HEADER(Structure):

> >

> > + _fields_ = [

> >

> > + ('Signature', ARRAY(c_char, 4)),

> >

> > + ('HeaderLength', c_uint32)

> >

> > + ]

> >

> > +

> >

> > +

> >

> > +class FSP_INFORMATION_HEADER(Structure):

> >

> > + _fields_ = [

> >

> > + ('Signature', ARRAY(c_char, 4)),

> >

> > + ('HeaderLength', c_uint32),

> >

> > + ('Reserved1', c_uint16),

> >

> > + ('SpecVersion', c_uint8),

> >

> > + ('HeaderRevision', c_uint8),

> >

> > + ('ImageRevision', c_uint32),

> >

> > + ('ImageId', ARRAY(c_char, 8)),

> >

> > + ('ImageSize', c_uint32),

> >

> > + ('ImageBase', c_uint32),

> >

> > + ('ImageAttribute', c_uint16),

> >

> > + ('ComponentAttribute', c_uint16),

> >

> > + ('CfgRegionOffset', c_uint32),

> >

> > + ('CfgRegionSize', c_uint32),

> >

> > + ('Reserved2', c_uint32),

> >

> > + ('TempRamInitEntryOffset', c_uint32),

> >

> > + ('Reserved3', c_uint32),

> >

> > + ('NotifyPhaseEntryOffset', c_uint32),

> >

> > + ('FspMemoryInitEntryOffset', c_uint32),

> >

> > + ('TempRamExitEntryOffset', c_uint32),

> >

> > + ('FspSiliconInitEntryOffset', c_uint32)

> >

> > + ]

> >

> > +

> >

> > +

> >

> > +class FSP_EXTENDED_HEADER(Structure):

> >

> > + _fields_ = [

> >

> > + ('Signature', ARRAY(c_char, 4)),

> >

> > + ('HeaderLength', c_uint32),

> >

> > + ('Revision', c_uint8),

> >

> > + ('Reserved', c_uint8),

> >

> > + ('FspProducerId', ARRAY(c_char, 6)),

> >

> > + ('FspProducerRevision', c_uint32),

> >

> > + ('FspProducerDataSize', c_uint32)

> >

> > + ]

> >

> > +

> >

> > +

> >

> > +class FSP_PATCH_TABLE(Structure):

> >

> > + _fields_ = [

> >

> > + ('Signature', ARRAY(c_char, 4)),

> >

> > + ('HeaderLength', c_uint16),

> >

> > + ('HeaderRevision', c_uint8),

> >

> > + ('Reserved', c_uint8),

> >

> > + ('PatchEntryNum', c_uint32)

> >

> > + ]

> >

> > +

> >

> > +

> >

> > +class Section:

> >

> > + def __init__(self, offset, secdata):

> >

> > + self.SecHdr = EFI_COMMON_SECTION_HEADER.from_buffer(secdata, 0)

> >

> > + self.SecData = secdata[0:int(self.SecHdr.Size)]

> >

> > + self.Offset = offset

> >

> > +

> >

> > +

> >

> > +def AlignPtr(offset, alignment=8):

> >

> > + return (offset + alignment - 1) & ~(alignment - 1)

> >

> > +

> >

> > +

> >

> > +def Bytes2Val(bytes):

> >

> > + return reduce(lambda x, y: (x << 8) | y, bytes[:: -1])

> >

> > +

> >

> > +

> >

> > +def Val2Bytes(value, blen):

> >

> > + return [(value >> (i*8) & 0xff) for i in range(blen)]

> >

> > +

> >

> > +

> >

> > +class FirmwareFile:

> >

> > + def __init__(self, offset, filedata):

> >

> > + self.FfsHdr = EFI_FFS_FILE_HEADER.from_buffer(filedata, 0)

> >

> > + self.FfsData = filedata[0:int(self.FfsHdr.Size)]

> >

> > + self.Offset = offset

> >

> > + self.SecList = []

> >

> > +

> >

> > + def ParseFfs(self):

> >

> > + ffssize = len(self.FfsData)

> >

> > + offset = sizeof(self.FfsHdr)

> >

> > + if self.FfsHdr.Name != '\xff' * 16:

> >

> > + while offset < (ffssize - sizeof(EFI_COMMON_SECTION_HEADER)):

> >

> > + sechdr = EFI_COMMON_SECTION_HEADER.from_buffer(

> >

> > + self.FfsData, offset)

> >

> > + sec = Section(

> >

> > + offset, self.FfsData[offset:offset + int(sechdr.Size)])

> >

> > + self.SecList.append(sec)

> >

> > + offset += int(sechdr.Size)

> >

> > + offset = AlignPtr(offset, 4)

> >

> > +

> >

> > +

> >

> > +class FirmwareVolume:

> >

> > + def __init__(self, offset, fvdata):

> >

> > + self.FvHdr = EFI_FIRMWARE_VOLUME_HEADER.from_buffer(fvdata, 0)

> >

> > + self.FvData = fvdata[0: self.FvHdr.FvLength]

> >

> > + self.Offset = offset

> >

> > + if self.FvHdr.ExtHeaderOffset > 0:

> >

> > + self.FvExtHdr = EFI_FIRMWARE_VOLUME_EXT_HEADER.from_buffer(

> >

> > + self.FvData, self.FvHdr.ExtHeaderOffset)

> >

> > + else:

> >

> > + self.FvExtHdr = None

> >

> > + self.FfsList = []

> >

> > +

> >

> > + def ParseFv(self):

> >

> > + fvsize = len(self.FvData)

> >

> > + if self.FvExtHdr:

> >

> > + offset = self.FvHdr.ExtHeaderOffset + self.FvExtHdr.ExtHeaderSize

> >

> > + else:

> >

> > + offset = self.FvHdr.HeaderLength

> >

> > + offset = AlignPtr(offset)

> >

> > + while offset < (fvsize - sizeof(EFI_FFS_FILE_HEADER)):

> >

> > + ffshdr = EFI_FFS_FILE_HEADER.from_buffer(self.FvData, offset)

> >

> > + if (ffshdr.Name == '\xff' * 16) and \

> >

> > + (int(ffshdr.Size) == 0xFFFFFF):

> >

> > + offset = fvsize

> >

> > + else:

> >

> > + ffs = FirmwareFile(

> >

> > + offset, self.FvData[offset:offset + int(ffshdr.Size)])

> >

> > + ffs.ParseFfs()

> >

> > + self.FfsList.append(ffs)

> >

> > + offset += int(ffshdr.Size)

> >

> > + offset = AlignPtr(offset)

> >

> > +

> >

> > +

> >

> > +class FspImage:

> >

> > + def __init__(self, offset, fih, fihoff, patch):

> >

> > + self.Fih = fih

> >

> > + self.FihOffset = fihoff

> >

> > + self.Offset = offset

> >

> > + self.FvIdxList = []

> >

> > + self.Type = "XTMSXXXXOXXXXXXX"[(fih.ComponentAttribute >> 12) & 0x0F]

> >

> > + self.PatchList = patch

> >

> > + self.PatchList.append(fihoff + 0x1C)

> >

> > +

> >

> > + def AppendFv(self, FvIdx):

> >

> > + self.FvIdxList.append(FvIdx)

> >

> > +

> >

> > + def Patch(self, delta, fdbin):

> >

> > + count = 0

> >

> > + applied = 0

> >

> > + for idx, patch in enumerate(self.PatchList):

> >

> > + ptype = (patch >> 24) & 0x0F

> >

> > + if ptype not in [0x00, 0x0F]:

> >

> > + raise Exception('ERROR: Invalid patch type %d !' % ptype)

> >

> > + if patch & 0x80000000:

> >

> > + patch = self.Fih.ImageSize - (0x1000000 - (patch & 0xFFFFFF))

> >

> > + else:

> >

> > + patch = patch & 0xFFFFFF

> >

> > + if (patch < self.Fih.ImageSize) and \

> >

> > + (patch + sizeof(c_uint32) <= self.Fih.ImageSize):

> >

> > + offset = patch + self.Offset

> >

> > + value = Bytes2Val(fdbin[offset:offset+sizeof(c_uint32)])

> >

> > + value += delta

> >

> > + fdbin[offset:offset+sizeof(c_uint32)] = Val2Bytes(

> >

> > + value, sizeof(c_uint32))

> >

> > + applied += 1

> >

> > + count += 1

> >

> > + # Don't count the FSP base address patch entry appended at the end

> >

> > + if count != 0:

> >

> > + count -= 1

> >

> > + applied -= 1

> >

> > + return (count, applied)

> >

> > +

> >

> > +

> >

> > +class FirmwareDevice:

> >

> > + def __init__(self, offset, FdData):

> >

> > + self.FvList = []

> >

> > + self.FspList = []

> >

> > + self.FspExtList = []

> >

> > + self.FihList = []

> >

> > + self.BuildList = []

> >

> > + self.OutputText = ""

> >

> > + self.Offset = 0

> >

> > + self.FdData = FdData

> >

> > +

> >

> > + def ParseFd(self):

> >

> > + offset = 0

> >

> > + fdsize = len(self.FdData)

> >

> > + self.FvList = []

> >

> > + while offset < (fdsize - sizeof(EFI_FIRMWARE_VOLUME_HEADER)):

> >

> > + fvh = EFI_FIRMWARE_VOLUME_HEADER.from_buffer(self.FdData, offset)

> >

> > + if b'_FVH' != fvh.Signature:

> >

> > + raise Exception("ERROR: Invalid FV header !")

> >

> > + fv = FirmwareVolume(

> >

> > + offset, self.FdData[offset:offset + fvh.FvLength])

> >

> > + fv.ParseFv()

> >

> > + self.FvList.append(fv)

> >

> > + offset += fv.FvHdr.FvLength

> >

> > +

> >

> > + def CheckFsp(self):

> >

> > + if len(self.FspList) == 0:

> >

> > + return

> >

> > +

> >

> > + fih = None

> >

> > + for fsp in self.FspList:

> >

> > + if not fih:

> >

> > + fih = fsp.Fih

> >

> > + else:

> >

> > + newfih = fsp.Fih

> >

> > + if (newfih.ImageId != fih.ImageId) or \

> >

> > + (newfih.ImageRevision != fih.ImageRevision):

> >

> > + raise Exception(

> >

> > + "ERROR: Inconsistent FSP ImageId or "

> >

> > + "ImageRevision detected !")

> >

> > +

> >

> > + def ParseFsp(self):

> >

> > + flen = 0

> >

> > + for idx, fv in enumerate(self.FvList):

> >

> > + # Check if this FV contains FSP header

> >

> > + if flen == 0:

> >

> > + if len(fv.FfsList) == 0:

> >

> > + continue

> >

> > + ffs = fv.FfsList[0]

> >

> > + if len(ffs.SecList) == 0:

> >

> > + continue

> >

> > + sec = ffs.SecList[0]

> >

> > + if sec.SecHdr.Type != EFI_SECTION_TYPE.RAW:

> >

> > + continue

> >

> > + fihoffset = ffs.Offset + sec.Offset + sizeof(sec.SecHdr)

> >

> > + fspoffset = fv.Offset

> >

> > + offset = fspoffset + fihoffset

> >

> > + fih = FSP_INFORMATION_HEADER.from_buffer(self.FdData, offset)

> >

> > + self.FihList.append(fih)

> >

> > + if b'FSPH' != fih.Signature:

> >

> > + continue

> >

> > +

> >

> > + offset += fih.HeaderLength

> >

> > +

> >

> > + offset = AlignPtr(offset, 2)

> >

> > + Extfih = FSP_EXTENDED_HEADER.from_buffer(self.FdData, offset)

> >

> > + self.FspExtList.append(Extfih)

> >

> > + offset = AlignPtr(offset, 4)

> >

> > + plist = []

> >

> > + while True:

> >

> > + fch = FSP_COMMON_HEADER.from_buffer(self.FdData, offset)

> >

> > + if b'FSPP' != fch.Signature:

> >

> > + offset += fch.HeaderLength

> >

> > + offset = AlignPtr(offset, 4)

> >

> > + else:

> >

> > + fspp = FSP_PATCH_TABLE.from_buffer(

> >

> > + self.FdData, offset)

> >

> > + offset += sizeof(fspp)

> >

> > + start_offset = offset + 32

> >

> > + end_offset = offset + 32

> >

> > + while True:

> >

> > + end_offset += 1

> >

> > + if(self.FdData[

> >

> > + end_offset: end_offset + 1] == b'\xff'):

> >

> > + break

> >

> > + self.BuildList.append(

> >

> > + self.FdData[start_offset:end_offset])

> >

> > + pdata = (c_uint32 * fspp.PatchEntryNum).from_buffer(

> >

> > + self.FdData, offset)

> >

> > + plist = list(pdata)

> >

> > + break

> >

> > +

> >

> > + fsp = FspImage(fspoffset, fih, fihoffset, plist)

> >

> > + fsp.AppendFv(idx)

> >

> > + self.FspList.append(fsp)

> >

> > + flen = fsp.Fih.ImageSize - fv.FvHdr.FvLength

> >

> > + else:

> >

> > + fsp.AppendFv(idx)

> >

> > + flen -= fv.FvHdr.FvLength

> >

> > + if flen < 0:

> >

> > + raise Exception("ERROR: Incorrect FV size in image !")

> >

> > + self.CheckFsp()

> >

> > +

> >

> > + def OutputFsp(self):

> >

> > + def copy_text_to_clipboard():

> >

> > + window.clipboard_clear()

> >

> > + window.clipboard_append(self.OutputText)

> >

> > +

> >

> > + window = tkinter.Tk()

> >

> > + window.title("Fsp Headers")

> >

> > + window.resizable(0, 0)

> >

> > + # Window Size

> >

> > + window.geometry("300x400+350+150")

> >

> > + frame = tkinter.Frame(window)

> >

> > + frame.pack(side=tkinter.BOTTOM)

> >

> > + # Vertical (y) Scroll Bar

> >

> > + scroll = tkinter.Scrollbar(window)

> >

> > + scroll.pack(side=tkinter.RIGHT, fill=tkinter.Y)

> >

> > + text = tkinter.Text(window,

> >

> > + wrap=tkinter.NONE, yscrollcommand=scroll.set)

> >

> > + i = 0

> >

> > + self.OutputText = self.OutputText + "Fsp Header Details \n\n"

> >

> > + while i < len(self.FihList):

> >

> > + try:

> >

> > + self.OutputText += str(self.BuildList[i].decode()) + "\n"

> >

> > + except Exception:

> >

> > + self.OutputText += "No description found\n"

> >

> > + self.OutputText += "FSP Header :\n "

> >

> > + self.OutputText += "Signature : " + \

> >

> > + str(self.FihList[i].Signature.decode('utf-8')) + "\n "

> >

> > + self.OutputText += "Header Length : " + \

> >

> > + str(hex(self.FihList[i].HeaderLength)) + "\n "

> >

> > + self.OutputText += "Header Revision : " + \

> >

> > + str(hex(self.FihList[i].HeaderRevision)) + "\n "

> >

> > + self.OutputText += "Spec Version : " + \

> >

> > + str(hex(self.FihList[i].SpecVersion)) + "\n "

> >

> > + self.OutputText += "Image Revision : " + \

> >

> > + str(hex(self.FihList[i].ImageRevision)) + "\n "

> >

> > + self.OutputText += "Image Id : " + \

> >

> > + str(self.FihList[i].ImageId.decode('utf-8')) + "\n "

> >

> > + self.OutputText += "Image Size : " + \

> >

> > + str(hex(self.FihList[i].ImageSize)) + "\n "

> >

> > + self.OutputText += "Image Base : " + \

> >

> > + str(hex(self.FihList[i].ImageBase)) + "\n "

> >

> > + self.OutputText += "Image Attribute : " + \

> >

> > + str(hex(self.FihList[i].ImageAttribute)) + "\n "

> >

> > + self.OutputText += "Cfg Region Offset : " + \

> >

> > + str(hex(self.FihList[i].CfgRegionOffset)) + "\n "

> >

> > + self.OutputText += "Cfg Region Size : " + \

> >

> > + str(hex(self.FihList[i].CfgRegionSize)) + "\n "

> >

> > + self.OutputText += "API Entry Num : " + \

> >

> > + str(hex(self.FihList[i].Reserved2)) + "\n "

> >

> > + self.OutputText += "Temp Ram Init Entry : " + \

> >

> > + str(hex(self.FihList[i].TempRamInitEntryOffset)) + "\n "

> >

> > + self.OutputText += "FSP Init Entry : " + \

> >

> > + str(hex(self.FihList[i].Reserved3)) + "\n "

> >

> > + self.OutputText += "Notify Phase Entry : " + \

> >

> > + str(hex(self.FihList[i].NotifyPhaseEntryOffset)) + "\n "

> >

> > + self.OutputText += "Fsp Memory Init Entry : " + \

> >

> > + str(hex(self.FihList[i].FspMemoryInitEntryOffset)) + "\n "

> >

> > + self.OutputText += "Temp Ram Exit Entry : " + \

> >

> > + str(hex(self.FihList[i].TempRamExitEntryOffset)) + "\n "

> >

> > + self.OutputText += "Fsp Silicon Init Entry : " + \

> >

> > + str(hex(self.FihList[i].FspSiliconInitEntryOffset)) + "\n\n"

> >

> > + self.OutputText += "FSP Extended Header:\n "

> >

> > + self.OutputText += "Signature : " + \

> >

> > + str(self.FspExtList[i].Signature.decode('utf-8')) + "\n "

> >

> > + self.OutputText += "Header Length : " + \

> >

> > + str(hex(self.FspExtList[i].HeaderLength)) + "\n "

> >

> > + self.OutputText += "Header Revision : " + \

> >

> > + str(hex(self.FspExtList[i].Revision)) + "\n "

> >

> > + self.OutputText += "Fsp Producer Id : " + \

> >

> > + str(self.FspExtList[i].FspProducerId.decode('utf-8')) + "\n "

> >

> > + self.OutputText += "FspProducerRevision : " + \

> >

> > + str(hex(self.FspExtList[i].FspProducerRevision)) + "\n\n"

> >

> > + i += 1

> >

> > + text.insert(tkinter.INSERT, self.OutputText)

> >

> > + text.pack()

> >

> > + # Configure the scrollbars

> >

> > + scroll.config(command=text.yview)

> >

> > + copy_button = tkinter.Button(

> >

> > + window, text="Copy to Clipboard", command=copy_text_to_clipboard)

> >

> > + copy_button.pack(in_=frame, side=tkinter.LEFT, padx=20, pady=10)

> >

> > + exit_button = tkinter.Button(

> >

> > + window, text="Close", command=window.destroy)

> >

> > + exit_button.pack(in_=frame, side=tkinter.RIGHT, padx=20, pady=10)

> >

> > + window.mainloop()

> >

> > +

> >

> > +

> >

> > +class state:

> >

> > + def __init__(self):

> >

> > + self.state = False

> >

> > +

> >

> > + def set(self, value):

> >

> > + self.state = value

> >

> > +

> >

> > + def get(self):

> >

> > + return self.state

> >

> > +

> >

> > +

> >

> > +class application(tkinter.Frame):

> >

> > + def __init__(self, master=None):

> >

> > + root = master

> >

> > +

> >

> > + self.debug = True

> >

> > + self.mode = 'FSP'

> >

> > + self.last_dir = '.'

> >

> > + self.page_id = ''

> >

> > + self.page_list = {}

> >

> > + self.conf_list = {}

> >

> > + self.cfg_data_obj = None

> >

> > + self.org_cfg_data_bin = None

> >

> > + self.in_left = state()

> >

> > + self.in_right = state()

> >

> > +

> >

> > + # Check if current directory contains a file with a .yaml extension

> >

> > + # if not default self.last_dir to a Platform directory where it is

> >

> > + # easier to locate *BoardPkg\CfgData\*Def.yaml files

> >

> > + self.last_dir = '.'

> >

> > + if not any(fname.endswith('.yaml') for fname in os.listdir('.')):

> >

> > + platform_path = Path(os.path.realpath(__file__)).parents[2].\

> >

> > + joinpath('Platform')

> >

> > + if platform_path.exists():

> >

> > + self.last_dir = platform_path

> >

> > +

> >

> > + tkinter.Frame.__init__(self, master, borderwidth=2)

> >

> > +

> >

> > + self.menu_string = [

> >

> > + 'Save Config Data to Binary', 'Load Config Data from Binary',

> >

> > + 'Show Binary Information',

> >

> > + 'Load Config Changes from Delta File',

> >

> > + 'Save Config Changes to Delta File',

> >

> > + 'Save Full Config Data to Delta File',

> >

> > + 'Open Config BSF file'

> >

> > + ]

> >

> > +

> >

> > + root.geometry("1200x800")

> >

> > +

> >

> > + paned = ttk.Panedwindow(root, orient=tkinter.HORIZONTAL)

> >

> > + paned.pack(fill=tkinter.BOTH, expand=True, padx=(4, 4))

> >

> > +

> >

> > + status = tkinter.Label(master, text="", bd=1, relief=tkinter.SUNKEN,

> >

> > + anchor=tkinter.W)

> >

> > + status.pack(side=tkinter.BOTTOM, fill=tkinter.X)

> >

> > +

> >

> > + frame_left = ttk.Frame(paned, height=800, relief="groove")

> >

> > +

> >

> > + self.left = ttk.Treeview(frame_left, show="tree")

> >

> > +

> >

> > + # Set up tree HScroller

> >

> > + pady = (10, 10)

> >

> > + self.tree_scroll = ttk.Scrollbar(frame_left,

> >

> > + orient="vertical",

> >

> > + command=self.left.yview)

> >

> > + self.left.configure(yscrollcommand=self.tree_scroll.set)

> >

> > + self.left.bind("<<TreeviewSelect>>", self.on_config_page_select_change)

> >

> > + self.left.bind("<Enter>", lambda e: self.in_left.set(True))

> >

> > + self.left.bind("<Leave>", lambda e: self.in_left.set(False))

> >

> > + self.left.bind("<MouseWheel>", self.on_tree_scroll)

> >

> > +

> >

> > + self.left.pack(side='left',

> >

> > + fill=tkinter.BOTH,

> >

> > + expand=True,

> >

> > + padx=(5, 0),

> >

> > + pady=pady)

> >

> > + self.tree_scroll.pack(side='right', fill=tkinter.Y,

> >

> > + pady=pady, padx=(0, 5))

> >

> > +

> >

> > + frame_right = ttk.Frame(paned, relief="groove")

> >

> > + self.frame_right = frame_right

> >

> > +

> >

> > + self.conf_canvas = tkinter.Canvas(frame_right, highlightthickness=0)

> >

> > + self.page_scroll = ttk.Scrollbar(frame_right,

> >

> > + orient="vertical",

> >

> > + command=self.conf_canvas.yview)

> >

> > + self.right_grid = ttk.Frame(self.conf_canvas)

> >

> > + self.conf_canvas.configure(yscrollcommand=self.page_scroll.set)

> >

> > + self.conf_canvas.pack(side='left',

> >

> > + fill=tkinter.BOTH,

> >

> > + expand=True,

> >

> > + pady=pady,

> >

> > + padx=(5, 0))

> >

> > + self.page_scroll.pack(side='right', fill=tkinter.Y,

> >

> > + pady=pady, padx=(0, 5))

> >

> > + self.conf_canvas.create_window(0, 0, window=self.right_grid,

> >

> > + anchor='nw')

> >

> > + self.conf_canvas.bind('<Enter>', lambda e: self.in_right.set(True))

> >

> > + self.conf_canvas.bind('<Leave>', lambda e: self.in_right.set(False))

> >

> > + self.conf_canvas.bind("<Configure>", self.on_canvas_configure)

> >

> > + self.conf_canvas.bind_all("<MouseWheel>", self.on_page_scroll)

> >

> > +

> >

> > + paned.add(frame_left, weight=2)

> >

> > + paned.add(frame_right, weight=10)

> >

> > +

> >

> > + style = ttk.Style()

> >

> > + style.layout("Treeview", [('Treeview.treearea', {'sticky': 'nswe'})])

> >

> > +

> >

> > + menubar = tkinter.Menu(root)

> >

> > + file_menu = tkinter.Menu(menubar, tearoff=0)

> >

> > + file_menu.add_command(label="Open Config YAML file",

> >

> > + command=self.load_from_yaml)

> >

> > + file_menu.add_command(label=self.menu_string[6],

> >

> > + command=self.load_from_bsf_file)

> >

> > + file_menu.add_command(label=self.menu_string[2],

> >

> > + command=self.load_from_fd)

> >

> > + file_menu.add_command(label=self.menu_string[0],

> >

> > + command=self.save_to_bin,

> >

> > + state='disabled')

> >

> > + file_menu.add_command(label=self.menu_string[1],

> >

> > + command=self.load_from_bin,

> >

> > + state='disabled')

> >

> > + file_menu.add_command(label=self.menu_string[3],

> >

> > + command=self.load_from_delta,

> >

> > + state='disabled')

> >

> > + file_menu.add_command(label=self.menu_string[4],

> >

> > + command=self.save_to_delta,

> >

> > + state='disabled')

> >

> > + file_menu.add_command(label=self.menu_string[5],

> >

> > + command=self.save_full_to_delta,

> >

> > + state='disabled')

> >

> > + file_menu.add_command(label="About", command=self.about)

> >

> > + menubar.add_cascade(label="File", menu=file_menu)

> >

> > + self.file_menu = file_menu

> >

> > +

> >

> > + root.config(menu=menubar)

> >

> > +

> >

> > + if len(sys.argv) > 1:

> >

> > + path = sys.argv[1]

> >

> > + if not path.endswith('.yaml') and not path.endswith('.pkl'):

> >

> > + messagebox.showerror('LOADING ERROR',

> >

> > + "Unsupported file '%s' !" % path)

> >

> > + return

> >

> > + else:

> >

> > + self.load_cfg_file(path)

> >

> > +

> >

> > + if len(sys.argv) > 2:

> >

> > + path = sys.argv[2]

> >

> > + if path.endswith('.dlt'):

> >

> > + self.load_delta_file(path)

> >

> > + elif path.endswith('.bin'):

> >

> > + self.load_bin_file(path)

> >

> > + else:

> >

> > + messagebox.showerror('LOADING ERROR',

> >

> > + "Unsupported file '%s' !" % path)

> >

> > + return

> >

> > +

> >

> > + def set_object_name(self, widget, name):

> >

> > + self.conf_list[id(widget)] = name

> >

> > +

> >

> > + def get_object_name(self, widget):

> >

> > + if id(widget) in self.conf_list:

> >

> > + return self.conf_list[id(widget)]

> >

> > + else:

> >

> > + return None

> >

> > +

> >

> > + def limit_entry_size(self, variable, limit):

> >

> > + value = variable.get()

> >

> > + if len(value) > limit:

> >

> > + variable.set(value[:limit])

> >

> > +

> >

> > + def on_canvas_configure(self, event):

> >

> > + self.right_grid.grid_columnconfigure(0, minsize=event.width)

> >

> > +

> >

> > + def on_tree_scroll(self, event):

> >

> > + if not self.in_left.get() and self.in_right.get():

> >

> > + # This prevents scroll event from being handled by both left and

> >

> > + # right frame at the same time.

> >

> > + self.on_page_scroll(event)

> >

> > + return 'break'

> >

> > +

> >

> > + def on_page_scroll(self, event):

> >

> > + if self.in_right.get():

> >

> > + # Only scroll when it is in active area

> >

> > + min, max = self.page_scroll.get()

> >

> > + if not((min == 0.0) and (max == 1.0)):

> >

> > + self.conf_canvas.yview_scroll(-1 * int(event.delta / 120),

> >

> > + 'units')

> >

> > +

> >

> > + def update_visibility_for_widget(self, widget, args):

> >

> > +

> >

> > + visible = True

> >

> > + item = self.get_config_data_item_from_widget(widget, True)

> >

> > + if item is None:

> >

> > + return visible

> >

> > + elif not item:

> >

> > + return visible

> >

> > +

> >

> > + result = 1

> >

> > + if item['condition']:

> >

> > + result = self.evaluate_condition(item)

> >

> > + if result == 2:

> >

> > + # Gray

> >

> > + widget.configure(state='disabled')

> >

> > + elif result == 0:

> >

> > + # Hide

> >

> > + visible = False

> >

> > + widget.grid_remove()

> >

> > + else:

> >

> > + # Show

> >

> > + widget.grid()

> >

> > + widget.configure(state='normal')

> >

> > +

> >

> > + return visible

> >

> > +

> >

> > + def update_widgets_visibility_on_page(self):

> >